Back in August 2009, the idea for a system to deposit research output directly into Institutional Repositories (IRs) was formulated (like many other great ideas) on the back of a napkin, and presented in this ‘Basic Premise’ post. Development work on the Open Access Repository Junction finished in March 2011 and was followed a year later by the current project on the Repository Junction Broker (RJ Broker).

Development work has progressed over the last three years and a prototype RJ Broker has been designed, but many questions were raised along the way. We decided to take advantage of the presence of many RJ Broker stakeholders at the 7th International Conference on Open Repositories (OR2012) in Edinburgh in July 2012 to refine our vision with their direct input. An evening workshop was organised on 9 July, and representatives of our stakeholders (IR managers, funders, publishers, and IR software and service developers from the UK, Europe, the US and Australia) were invited to take part. A summary of this brainstorming session is presented here.

RJ Broker: A delivery service for research output

The RJ Broker Team first set the scene with a short presentation on the current and intended RJ Broker functionality.

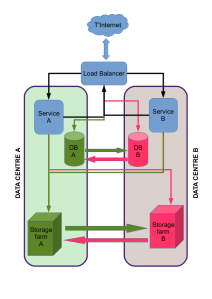

The RJ Broker is in effect a delivery service for research output. It accepts deposits from data providers (institutional and subject repositories, funder and publisher systems). For each deposit, it uses the metadata to identify the organisations, and any associated repositories, that are suitable for receiving it. It then transfers the deposit to those repositories that have registered with the RJ Broker service. The metadata acts as the address card, and the deposit is the parcel.
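The routing step can be pictured as a small lookup: read organisations from the address card, map them to their repositories, and keep only those that have registered. The sketch below is a minimal illustration under stated assumptions, not the RJ Broker's implementation: the `affiliations` metadata field, the organisation-to-repository map and the repository IDs are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Deposit:
    """A parcel: the metadata is the address card, files are the contents."""
    metadata: dict
    files: List[str] = field(default_factory=list)


def identify_organisations(metadata: dict) -> Set[str]:
    # Hypothetical field name: the post does not say which metadata
    # elements are used to identify organisations.
    return set(metadata.get("affiliations", []))


def route(deposit: Deposit,
          org_repositories: Dict[str, List[str]],
          registered: Set[str]) -> List[str]:
    """Return the registered repositories that should receive this deposit."""
    targets: List[str] = []
    for org in sorted(identify_organisations(deposit.metadata)):
        for repo in org_repositories.get(org, []):
            # Only IRs that have registered with the broker can be delivered to.
            if repo in registered and repo not in targets:
                targets.append(repo)
    return targets
```

For example, a deposit affiliated with two organisations, of which only one has a registered IR, is routed to that single IR.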

In order to receive deposits from the RJ Broker, IRs have to register their SWORD credentials with the service. This gives the RJ Broker an access point for data input into their systems, just as a letter box lets mail into a house.
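A registration can be thought of as a record holding an IR's SWORD endpoint and credentials, kept in a registry that the broker consults before delivery. This is a hypothetical in-memory sketch: the class names, the fields and the basic URL sanity check are our own assumptions, and it does not call any real SWORD client library.

```python
from dataclasses import dataclass
from typing import Dict, Optional
from urllib.parse import urlparse


@dataclass(frozen=True)
class SwordRegistration:
    """The 'letter box' an IR gives the broker: endpoint plus credentials."""
    repository_id: str
    endpoint_url: str   # hypothetical SWORD deposit URL for the IR
    username: str
    password: str       # a real service would store secrets securely


class Registry:
    """In-memory registry of IRs that have registered with the broker."""

    def __init__(self) -> None:
        self._entries: Dict[str, SwordRegistration] = {}

    def register(self, reg: SwordRegistration) -> None:
        # Assumed sanity check only: the URL must at least look like a web address.
        parsed = urlparse(reg.endpoint_url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not a usable endpoint URL: {reg.endpoint_url!r}")
        self._entries[reg.repository_id] = reg

    def unregister(self, repository_id: str) -> None:
        self._entries.pop(repository_id, None)

    def endpoint_for(self, repository_id: str) -> Optional[SwordRegistration]:
        """Return the registration, or None if the IR has no letter box."""
        return self._entries.get(repository_id)

    def is_registered(self, repository_id: str) -> bool:
        return repository_id in self._entries
```

An IR that never registers simply has no entry, so the broker has nowhere to deliver for it.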

At this stage, the focus for the type of deposit (or content of the parcel) is research publications. However, the way the RJ Broker works is independent of the deposit type: it will transfer parcels of any type, big or small. For example, a deposit can be a publication with several supporting data files, just an article, or just the data files. The parcel can even be empty or taped shut. For an article published under Gold Open Access, the address card alone is enough to notify recipients that the article is available in the publisher's system. When an article is subject to an embargo period, a sealed parcel allows delivery to take place straight away even though it can only be opened later, like presents sent by faraway relatives and placed under the tree to be opened on Christmas Day!
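The parcel analogy maps onto three cases: metadata only (a notification), sealed (embargoed files delivered now, opened later) and open. The sketch below is illustrative only; the `Parcel` class and the `embargo_until` field are hypothetical names, and the point it makes is that the broker's `deliver` step treats every case identically.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Parcel:
    metadata: dict                                    # address card, always readable
    files: List[str] = field(default_factory=list)    # publication and/or data files
    embargo_until: Optional[date] = None              # hypothetical field name

    def describe(self, today: date) -> str:
        if not self.files:
            return "notification"   # metadata only, e.g. a Gold OA article
        if self.embargo_until is not None and today < self.embargo_until:
            return "sealed"         # delivered now, opened after the embargo
        return "open"


def deliver(parcel: Parcel) -> Parcel:
    """The broker forwards every kind of parcel unchanged."""
    return parcel
```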

The mind map below was used to inform the discussion of all the questions we were seeking to answer.

Metadata: The all important label

Like the address card on top of a parcel, the metadata is visible to all. Indeed, the metadata is always fully open access, regardless of any embargo period imposed on the data itself. The metadata is owned by the person who creates it (author, publisher or IR manager), but there is no copyright on it. The metadata can even be considered an advertisement flyer for the data itself, which benefits its owner (whether author, publisher or IR manager); this explains why owners support open access for metadata.

Standards are generally a good thing, improving quality and facilitating exchange. For example, a funder code field in the metadata would make it much easier for funding agencies to report on return on investment. Several metadata standards are currently being developed, for example CERIF, RIOXX, COUNTER or the OpenAIRE Guidelines. The RJ Broker will support these standards, but it is not its duty to ensure that they are adhered to or, for example, that all required fields have been filled in. An address card is expected to provide enough space for the information needed to deliver the parcel, but nobody expects the postman to fill in a missing house number or any other missing information.

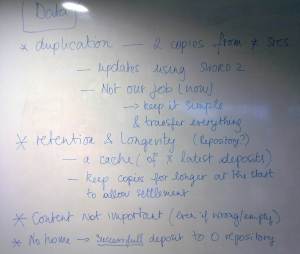

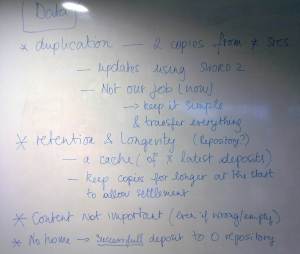

Deposit: What is in that parcel?

The RJ Broker has to assume some responsibility for the objects it is entrusted to transfer to IRs: mainly the correct identification of appropriate IRs and the subsequent delivery to these IRs. The RJ Broker is also responsible for the safekeeping of each deposit while it is in transit.

Once a deposit has been successfully transferred to the registered IRs, the responsibility of the RJ Broker ends. It may seem tempting to extend the functionality of the RJ Broker to store a copy of every deposit, in order to allow later downloads by newly registered IRs or simply to provide a backup. However, this is not the purpose of a delivery service, and it would turn the RJ Broker into a repository that could grow to a massive size. Therefore the RJ Broker will only keep recently transferred deposits for a limited period of time, to give IRs time to accept and process them. Similarly, a postman is not required to copy every postcard he delivers for future safekeeping, but undelivered items are returned to the sorting office and held for a while to allow collection.
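Keeping deposits "for a limited period of time" amounts to a retention window with periodic purging. In this sketch the 30-day figure is purely an assumption (the post gives no number), and `TransitStore` is a hypothetical name; it only tracks when each deposit was transferred.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Hypothetical value: the post only says "a limited period of time".
DEFAULT_RETENTION = timedelta(days=30)


class TransitStore:
    """Holds recently transferred deposits so IRs have time to process them."""

    def __init__(self, retention: timedelta = DEFAULT_RETENTION) -> None:
        self.retention = retention
        self._held: Dict[str, datetime] = {}  # tracking ID -> transfer time

    def hold(self, tracking_id: str, transferred_at: datetime) -> None:
        self._held[tracking_id] = transferred_at

    def is_held(self, tracking_id: str) -> bool:
        return tracking_id in self._held

    def purge_expired(self, now: datetime) -> List[str]:
        """Drop deposits older than the retention period; return their IDs."""
        expired = [tid for tid, t in self._held.items()
                   if now - t > self.retention]
        for tid in expired:
            del self._held[tid]
        return expired
```

Purging rather than archiving is what keeps the broker from growing into a repository.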

If none of the identified IRs has registered with the RJ Broker, no delivery is possible. For the RJ Broker, this still constitutes successful processing of the deposit. Future developments could consider transferring the deposit to open repositories such as OpenDepot.org, or sending a notification to the IRs advising them to register with the RJ Broker should they wish to receive direct deliveries of research output.
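The point that zero deliveries still counts as success can be made explicit in how a processing result is recorded. This is a hypothetical sketch: `ProcessingResult`, `process` and the `send` callable are assumed names, and the `unregistered` list only marks where a future notification or OpenDepot.org fallback might hook in.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Set


@dataclass
class ProcessingResult:
    tracking_id: str
    status: str
    delivered_to: List[str] = field(default_factory=list)
    # IRs identified from the metadata that have not registered; a future
    # development could notify them or fall back to an open repository.
    unregistered: List[str] = field(default_factory=list)


def process(tracking_id: str,
            identified: Iterable[str],
            registered: Set[str],
            send: Callable[[str], None]) -> ProcessingResult:
    delivered: List[str] = []
    unregistered: List[str] = []
    for ir in identified:
        if ir in registered:
            send(ir)            # push the deposit to the IR's endpoint
            delivered.append(ir)
        else:
            unregistered.append(ir)
    # Zero deliveries is still a successful outcome for the broker.
    return ProcessingResult(tracking_id, "processed", delivered, unregistered)
```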

The RJ Broker will transfer every deposit it receives. It does not provide an inspection or validation service, and therefore will not flag an empty, duplicate, incomplete or badly formatted deposit.

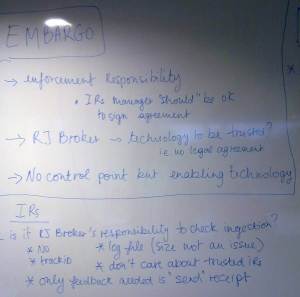

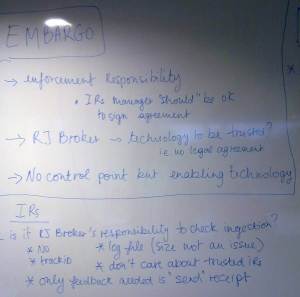

Dealing with embargo

The RJ Broker aims to support Open Access (OA) by enabling the dissemination of research output across the UK and beyond. It does not matter for the delivery process whether this OA is gold or green. However, it is important that any embargo period is dealt with appropriately.

A legal agreement between the RJ Broker and each data provider, requiring that embargo periods be respected, will be signed before any data from that provider is transferred by the RJ Broker. Each IR will in turn have to accept a similar agreement before it can receive data, through the RJ Broker, from providers enforcing an embargo. Data providers have to ensure that embargo periods are correctly recorded in the metadata, and IRs have to respect any embargo specified in the metadata. The RJ Broker acts as a trusted enabling technology between the two parties, not as a control point; it has no responsibility for enforcing embargoes. Legal agreements are currently being put in place for the early adopters of the RJ Broker, and the hope is that a set of standard agreements can be derived from them to promote take-up and ease administration.
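The split of duties described above can be sketched as two functions: the broker only uses the signed agreements to decide who may receive data from an embargo-enforcing provider, while each IR reads the embargo date from the metadata and decides when to expose the files. Everything here is a hypothetical illustration; in particular, `embargo_end` as an ISO 8601 date string is an assumed metadata field.

```python
from datetime import date
from typing import Iterable, List, Set


def eligible_recipients(targets: Iterable[str],
                        provider: str,
                        embargo_providers: Set[str],
                        signed_irs: Set[str]) -> List[str]:
    """Broker side: apply the legal agreements when choosing recipients.

    Data from providers that enforce embargoes only goes to IRs that have
    accepted the matching agreement. The broker never checks or enforces
    the embargo dates themselves.
    """
    if provider not in embargo_providers:
        return list(targets)
    return [ir for ir in targets if ir in signed_irs]


def ir_may_expose(metadata: dict, today: date) -> bool:
    """IR side: keep the files dark until the embargo end date in the metadata.

    'embargo_end' (an ISO 8601 date string) is a hypothetical field name.
    """
    end = metadata.get("embargo_end")
    return end is None or today >= date.fromisoformat(end)
```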

Beyond the legal agreement, the RJ Broker will not perform additional checks or require further certification or accreditation from IRs. The aim of the RJ Broker is to disseminate research output widely; it is not its purpose to rate IRs for trust or reliability, which is best left to the appropriate authorities.

Tracking a Deposit

The RJ Broker assigns a tracking ID to each deposit, which enables data suppliers to check on the onward progress of the deposit after it has been successfully delivered to an IR's SWORD endpoint.

As mentioned previously, the responsibility of the RJ Broker ends once the deposit has been successfully transferred to the registered IRs. Institutions follow different procedures, workflows and timetables when processing deposits left for inclusion in their repositories. Confirming that a deposit has been successfully ingested by an IR is therefore a complex task, and it is not part of the RJ Broker's remit as a delivery service. However, the RJ Broker will provide data suppliers with a send receipt, including the tracking ID, as proof that the deposit has been processed. The data supplier can later use this ID to check the status of the deposit with each IR to which it was transferred: received, queued for processing, accepted, live or rejected.
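A send receipt and the later status check might look like the following. This is a sketch under assumptions: the receipt fields, the use of a UUID as the tracking ID and the `ask_ir` callable (standing in for however an IR reports status) are all hypothetical; only the five status values come from the text. Note that the status query runs on the data supplier's side, not in the broker.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Callable, Dict, Iterable, Tuple


class IRStatus(Enum):
    RECEIVED = "received"
    QUEUED = "queued for processing"
    ACCEPTED = "accepted"
    LIVE = "live"
    REJECTED = "rejected"


@dataclass(frozen=True)
class SendReceipt:
    tracking_id: str
    processed_at: datetime
    delivered_to: Tuple[str, ...]


def issue_receipt(delivered_to: Iterable[str]) -> SendReceipt:
    """Broker side: proof of processing, carrying a fresh tracking ID."""
    return SendReceipt(str(uuid.uuid4()), datetime.now(timezone.utc),
                       tuple(delivered_to))


def check_status(receipt: SendReceipt,
                 ask_ir: Callable[[str, str], IRStatus]) -> Dict[str, IRStatus]:
    """Supplier side: ask each receiving IR about the tracked deposit."""
    return {ir: ask_ir(ir, receipt.tracking_id) for ir in receipt.delivered_to}
```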

Keep it simple!

The discussion was very productive: all the topics in our mind map were covered, and answers to all the questions regarding the functionality of the RJ Broker were agreed. The unanimous conclusion was to keep it simple!

The RJ Broker should aim to be a delivery service only. It will follow a “push only” model. Deposits will be pushed to the RJ Broker by data suppliers and the RJ Broker will push the deposits to the IRs. This enables the RJ Broker to have a streamlined workflow.
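The "push only" model means the broker exposes a way to receive deposits and forwards them immediately, with no method for fetching data from suppliers. The sketch below is hypothetical: `PushBroker` and the `inboxes` map of IR IDs to callables are stand-ins for real SWORD deliveries.

```python
from typing import Callable, Dict, List


class PushBroker:
    """Suppliers push deposits in; the broker pushes them straight on.

    There is deliberately no method for collecting ("pulling") data.
    """

    def __init__(self, inboxes: Dict[str, Callable[[dict], None]]) -> None:
        # Hypothetical map of IR ID -> function accepting a pushed deposit.
        self.inboxes = inboxes

    def receive(self, deposit: dict, targets: List[str]) -> List[str]:
        """Called by a data supplier; returns the IRs the deposit was pushed to."""
        pushed: List[str] = []
        for ir in targets:
            inbox = self.inboxes.get(ir)
            if inbox is not None:
                inbox(deposit)
                pushed.append(ir)
        return pushed
```

Because nothing is polled or stored beyond the call, the workflow stays a single pass from supplier to IR.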

Specifically, the RJ Broker will NOT:

- provide any reporting or statistics

- filter incoming data

- improve data or metadata

- enforce standard compliance

- be a repository

- collect (“pull”) data from suppliers

I would like to thank everyone who took part in the workshop and helped us shape the functionality of the future RJ Broker service! Development and trials are ongoing, with a first version of the RJ Broker due for release to UK RepositoryNet+ in Spring 2013. Watch this space!

List of Attendees

Tim Brody (University of Southampton, UK), Yvonne Budden (University of Warwick, UK), Thom Bunting (UKOLN, UK), Peter Burnhill (UK RepositoryNet+, UK), Pablo de Castro Martin (UK RepositoryNet+, UK), Andrew Dorward (UK RepositoryNet+, UK), Kathi Fletcher (Shuttleworth Foundation, USA), Robert Hilliker (Columbia University, USA), Richard Jones (Cottage Labs, UK), Stuart Lewis (The University of Edinburgh, UK), John McCaffery (University of Dundee, UK), Paolo Manghi (OpenAIRE, Italy), Muriel Mewissen (RJ Broker, UK), Balviar Notay (JISC, UK), Tara Packer (Nature Publishing Group, USA), Marvin Reimer (Shuttleworth Foundation, USA), Anna Shadbolt (University of Melbourne, Australia), Terry Sloan (UK RepositoryNet+, UK), Elin Strangeland (University of Cambridge, UK), Ian Stuart (RJ Broker, UK), James Toon (The University of Edinburgh, UK), Jin Ying (Rice University, USA)